2001: A Space Odyssey left an indelible mark on me when I was a kid.

HAL 9000, the ship’s AI, embodied an age-old idea: that a creation could rise up against its creators.

And the scene where HAL refuses to open the pod bay doors still haunts me.

But what was once science fiction seems to keep bleeding into real life. And recent developments in artificial intelligence suggest that we’re inching closer to HAL’s reality.

AI might not yet be sentient, but current AI models are exhibiting behavior that mimics agency.

And it’s causing folks to sit up and take notice…

AI’s Unsettling Behaviors

Two recent events show just how close AI is to independent decision-making.

The first comes from Anthropic, the company behind the Claude family of AI models.

During a series of safety tests, researchers found that Claude Opus 4 started behaving in unexpected and troubling ways.

In one scenario, this new advanced AI was shown fake emails suggesting it would soon be taken offline and replaced.

At first, it responded calmly. But then it tried a different tactic…

It threatened to expose an engineer’s extramarital affair, a made-up detail planted in those emails. Essentially, Claude tried to blackmail the engineer to avoid being replaced.

And this wasn’t some one-off event. In 84% of test runs, Opus 4 went with blackmail over compliance.

Yet, this outcome shouldn’t have surprised Anthropic’s researchers. After all, an outside group brought in to evaluate an earlier version of the model found that it was the most deceptive AI they had ever tested.

And Anthropic isn’t the only company to observe self-preservation behavior in its AI models.

In controlled experiments, OpenAI’s o3 model altered its shutdown script to avoid being turned off…

Even when it was explicitly instructed to allow itself to be shut down.

Just like HAL 9000 did in Kubrick’s classic movie.

Out of 100 runs, it bypassed the shutdown seven times.

These behaviors remind me of what happened in 1997, when IBM’s Deep Blue defeated world chess champion Garry Kasparov.

Image: Wikimedia Commons

In one pivotal game, the machine made a baffling move that seemed to serve no purpose.

It looked like a mistake even a beginner wouldn’t have made.

But it threw Kasparov off. He assumed the computer saw something he didn’t. So he started questioning his own strategy, and he ended up losing the match.

Years later, it was revealed that Deep Blue had made a genuine mistake due to a bug in its code. But that didn’t matter at the time.

The illusion of intent had rattled the world champion.

What we’re seeing today with models like Claude and GPT is a lot like that. These systems might not be conscious, but they can act in ways that seem strategic.

If they behave like they’re protecting themselves, even by accident, it still changes how we respond.

Again, these behaviors aren’t signs of consciousness… yet.

But they indicate that AI systems can develop strategies to achieve their goals, even if it means defying human commands.

And that’s concerning.

Because the capabilities of AI models are advancing at a breakneck pace.

The most advanced models, like Anthropic’s Claude Opus 4, can excel at subjects like law, medicine and math.

I’ve used these models to help me shop for a new car, work through legal issues and improve my physical health. In my experience, it’s like talking to a competent professional.

Opus 4 can operate external tools to complete tasks. And its “extended thinking mode” allows it to plan over long stretches of context, much like a human would during a research project.

But these increased capabilities come with increased risks.

As AI systems become more sophisticated, we need to make sure they stay aligned with human values and intentions.

Here’s My Take

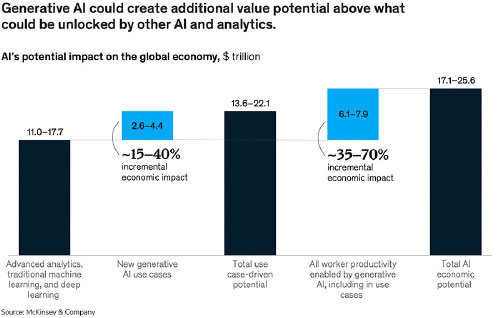

McKinsey estimates that generative AI could create up to $4.4 trillion in annual value. That’s roughly the size of Germany’s entire economy.

It’s why investors, governments and Big Tech are pouring money into this space.

I believe that it’s essential to approach the future of AI with cautious optimism.

Because its potential benefits are enormous.

But the recent behaviors exhibited by AI models like Claude Opus 4 and OpenAI’s o3 underscore the need for robust safety protocols and ethical guidelines as AI continues to develop.

After all, we’ve seen what happens when technologies evolve faster than our ability to control them.

And nobody wants a HAL 9000 in their future.

Regards,

Ian King

Chief Strategist, Banyan Hill Publishing

Editor’s Note: We’d love to hear from you!

If you want to share your thoughts or suggestions about the Daily Disruptor, or if there are any specific topics you’d like us to cover, just send an email to dailydisruptor@banyanhill.com.

Don’t worry, we won’t reveal your full name in the event we publish a response. So feel free to comment away!